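Goal

Analyze what kinds of passengers were most likely to survive.

Analysis steps

1. Data analysis

Downloading and loading the data

Dataset download: https://www.kaggle.com/c/titanic/data

Data description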

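| Feature | Description |
| --- | --- |
| survival | Survival |
| pclass | Ticket class |
| sex | Sex |
| Age | Age |
| sibsp | Number of siblings/spouses aboard |
| parch | Number of parents/children aboard |
| ticket | Ticket number |
| fare | Passenger fare |
| cabin | Cabin number |
| embarked | Port of embarkation |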

```python
# Import the required libraries
import numpy as np
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt
%matplotlib inline
```

```python
# Load the training and test sets
train = pd.read_csv("train.csv")
test = pd.read_csv("test.csv")

# Column types and non-null counts
train.info()
print("-" * 20)

# First five rows
train.head()
```

```text
<class 'pandas.core.frame.DataFrame'>
RangeIndex: 891 entries, 0 to 890
Data columns (total 12 columns):
PassengerId    891 non-null int64
Survived       891 non-null int64
Pclass         891 non-null int64
Name           891 non-null object
Sex            891 non-null object
Age            714 non-null float64
SibSp          891 non-null int64
Parch          891 non-null int64
Ticket         891 non-null object
Fare           891 non-null float64
Cabin          204 non-null object
Embarked       889 non-null object
dtypes: float64(2), int64(5), object(5)
memory usage: 83.6+ KB
--------------------
```

| | PassengerId | Survived | Pclass | Name | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 0 | 1 | 0 | 3 | Braund, Mr. Owen Harris | male | 22.0 | 1 | 0 | A/5 21171 | 7.2500 | NaN | S |
| 1 | 2 | 1 | 1 | Cumings, Mrs. John Bradley (Florence Briggs Th... | female | 38.0 | 1 | 0 | PC 17599 | 71.2833 | C85 | C |
| 2 | 3 | 1 | 3 | Heikkinen, Miss. Laina | female | 26.0 | 0 | 0 | STON/O2. 3101282 | 7.9250 | NaN | S |
| 3 | 4 | 1 | 1 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | female | 35.0 | 1 | 0 | 113803 | 53.1000 | C123 | S |
| 4 | 5 | 0 | 3 | Allen, Mr. William Henry | male | 35.0 | 0 | 0 | 373450 | 8.0500 | NaN | S |

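Feature analysis

- Correlation between numeric variables

```python
# Pairwise correlations between the numeric columns (PassengerId is only an identifier).
# numeric_only=True skips text columns such as Name, which newer pandas versions no longer drop silently.
train_corr = train.drop('PassengerId', axis=1).corr(numeric_only=True)
train_corr
```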

| | Survived | Pclass | Age | SibSp | Parch | Fare |
| --- | --- | --- | --- | --- | --- | --- |
| Survived | 1.000000 | -0.338481 | -0.077221 | -0.035322 | 0.081629 | 0.257307 |
| Pclass | -0.338481 | 1.000000 | -0.369226 | 0.083081 | 0.018443 | -0.549500 |
| Age | -0.077221 | -0.369226 | 1.000000 | -0.308247 | -0.189119 | 0.096067 |
| SibSp | -0.035322 | 0.083081 | -0.308247 | 1.000000 | 0.414838 | 0.159651 |
| Parch | 0.081629 | 0.018443 | -0.189119 | 0.414838 | 1.000000 | 0.216225 |
| Fare | 0.257307 | -0.549500 | 0.096067 | 0.159651 | 0.216225 | 1.000000 |

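```python
# Draw the correlation matrix as an annotated heatmap.
# plt.subplots returns a (figure, axes) pair, so unpack it and draw on the axes.
fig, ax = plt.subplots(figsize=(10, 8))
sns.heatmap(train_corr, vmin=-1, vmax=1, annot=True, ax=ax)
```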

- 分析每个变量与结果之间的关系

1 |

|

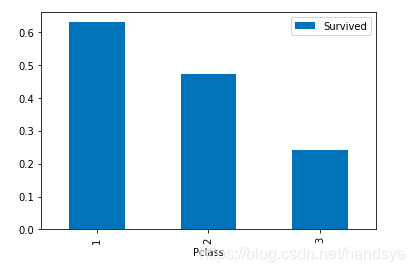

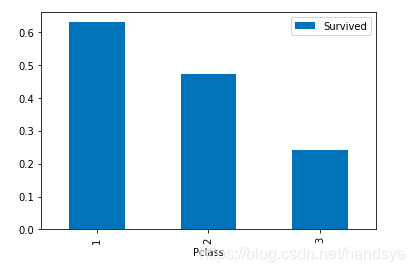

2 | train_p = train.groupby(['Pclass'])['Pclass','Survived'].mean() |

3 | train_p |

1 |

|

2 | Pclass Survived |

3 | Pclass |

4 | 1 1.0 0.629630 |

5 | 2 2.0 0.472826 |

6 | 3 3.0 0.242363 |

1 |

|

2 | train[['Pclass','Survived']].groupby(['Pclass']).mean().plot.bar() |

```python
# Mean survival rate by sex.
# Only Survived is selected because Sex is text and cannot be averaged.
train_s = train.groupby(['Sex'])[['Survived']].mean()
train_s
```

```text
        Survived
Sex
female  0.742038
male    0.188908
```

```python
# Bar chart of survival rate by sex
train[['Sex', 'Survived']].groupby(['Sex']).mean().plot.bar()
```

```python
# Survival rate by number of siblings/spouses aboard
train[['SibSp', 'Survived']].groupby(['SibSp']).mean()

# Survival rate by number of parents/children aboard
train[['Parch', 'Survived']].groupby(['Parch']).mean()
```

```text
       Survived
SibSp
0      0.345395
1      0.535885
2      0.464286
3      0.250000
4      0.166667
5      0.000000
8      0.000000
```

```text
       Survived
Parch
0      0.343658
1      0.550847
2      0.500000
3      0.600000
4      0.000000
5      0.200000
6      0.000000
```

1 |

|

2 |

|

3 | train_g = sns.FacetGrid(train, col='Survived',height=5) |

4 | train_g.map(plt.hist, 'Age', bins=40) |

1 | train.groupby(['Age'])['Survived'].mean().plot() |

1 |

|

2 |

|

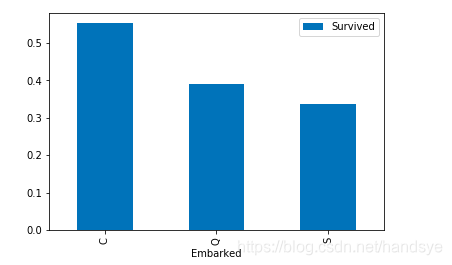

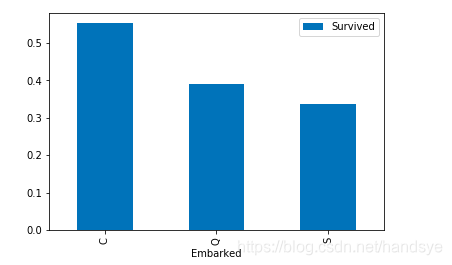

3 | train_e = train[['Embarked','Survived']].groupby(['Embarked']).mean().plot.bar() |
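The survival rate plotted at every distinct age can be noisy, because many ages have only one or two passengers. A minimal sketch of a binned view follows. It assumes the same bin edges used later for the Age feature, and the `toy` frame is made-up illustration data, not rows from `train.csv`.

```python
import pandas as pd

# Made-up data standing in for the Age and Survived columns
toy = pd.DataFrame({
    "Age": [2, 8, 15, 22, 25, 35, 40, 60, 70, None],
    "Survived": [1, 1, 0, 0, 1, 1, 0, 0, 0, 1],
})

# Group ages into bins; a missing Age falls outside every bin
age_bins = pd.cut(toy["Age"], bins=[0, 10, 18, 30, 50, 100])

# Survival rate and passenger count per age bin
rate_by_bin = toy.groupby(age_bins, observed=False)["Survived"].agg(["mean", "count"])
print(rate_by_bin)
```

Showing the count next to the mean makes it easier to see which bins rest on too few passengers to be trusted.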

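2. Feature engineering

```python
# Add a placeholder Survived column to the test set so both sets share the same columns
test['Survived'] = 0

# Stack the training and test sets so that every transformation is applied to both.
# DataFrame.append was removed in pandas 2.0; pd.concat is its replacement.
train_test = pd.concat([train, test], sort=False)

# Preview the test set with its placeholder column
test.head()
```

| | PassengerId | Pclass | Name | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked | Survived |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 0 | 892 | 3 | Kelly, Mr. James | male | 34.5 | 0 | 0 | 330911 | 7.8292 | NaN | Q | 0 |
| 1 | 893 | 3 | Wilkes, Mrs. James (Ellen Needs) | female | 47.0 | 1 | 0 | 363272 | 7.0000 | NaN | S | 0 |
| 2 | 894 | 2 | Myles, Mr. Thomas Francis | male | 62.0 | 0 | 0 | 240276 | 9.6875 | NaN | Q | 0 |
| 3 | 895 | 3 | Wirz, Mr. Albert | male | 27.0 | 0 | 0 | 315154 | 8.6625 | NaN | S | 0 |
| 4 | 896 | 3 | Hirvonen, Mrs. Alexander (Helga E Lindqvist) | female | 22.0 | 1 | 1 | 3101298 | 12.2875 | NaN | S | 0 |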

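Feature processing

- Pclass: passenger class, where 1 is the highest

```python
# One-hot encode the ticket class
fea1 = pd.get_dummies(train_test, columns=['Pclass'])
fea1.head()
```

| | Age | Cabin | Embarked | Fare | Name | Parch | PassengerId | Sex | SibSp | Survived | Ticket | Pclass_1 | Pclass_2 | Pclass_3 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 0 | 22.0 | NaN | S | 7.2500 | Braund, Mr. Owen Harris | 0 | 1 | male | 1 | 0 | A/5 21171 | 0 | 0 | 1 |
| 1 | 38.0 | C85 | C | 71.2833 | Cumings, Mrs. John Bradley (Florence Briggs Th... | 0 | 2 | female | 1 | 1 | PC 17599 | 1 | 0 | 0 |
| 2 | 26.0 | NaN | S | 7.9250 | Heikkinen, Miss. Laina | 0 | 3 | female | 0 | 1 | STON/O2. 3101282 | 0 | 0 | 1 |
| 3 | 35.0 | C123 | S | 53.1000 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | 0 | 4 | female | 1 | 1 | 113803 | 1 | 0 | 0 |
| 4 | 35.0 | NaN | S | 8.0500 | Allen, Mr. William Henry | 0 | 5 | male | 0 | 0 | 373450 | 0 | 0 | 1 |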

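- Sex: no missing values, so one-hot encode it directly

```python
# One-hot encode sex on top of the Pclass encoding
fea2 = pd.get_dummies(fea1, columns=["Sex"])

# Later steps operate on train_test, so carry the encoded columns forward
train_test = fea2
fea2.head()
```

| | Age | Cabin | Embarked | Fare | Name | Parch | PassengerId | SibSp | Survived | Ticket | Pclass_1 | Pclass_2 | Pclass_3 | Sex_female | Sex_male |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 0 | 22.0 | NaN | S | 7.2500 | Braund, Mr. Owen Harris | 0 | 1 | 1 | 0 | A/5 21171 | 0 | 0 | 1 | 0 | 1 |
| 1 | 38.0 | C85 | C | 71.2833 | Cumings, Mrs. John Bradley (Florence Briggs Th... | 0 | 2 | 1 | 1 | PC 17599 | 1 | 0 | 0 | 1 | 0 |
| 2 | 26.0 | NaN | S | 7.9250 | Heikkinen, Miss. Laina | 0 | 3 | 0 | 1 | STON/O2. 3101282 | 0 | 0 | 1 | 1 | 0 |
| 3 | 35.0 | C123 | S | 53.1000 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | 0 | 4 | 1 | 1 | 113803 | 1 | 0 | 0 | 1 | 0 |
| 4 | 35.0 | NaN | S | 8.0500 | Allen, Mr. William Henry | 0 | 5 | 0 | 0 | 373450 | 0 | 0 | 1 | 0 | 1 |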

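- SibSp and Parch: number of siblings/spouses and number of parents/children aboard

```python
# Family size: siblings/spouses plus parents/children
train_test['SibSp_Parch'] = train_test['SibSp'] + train_test['Parch']

# One-hot encode the two original counts and the combined family size
train_test = pd.get_dummies(train_test, columns=['SibSp', 'Parch', 'SibSp_Parch'])
```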

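- Embarked: the data has a very small number of missing values (2 in the training set, per the `train.info()` output above). During one-hot encoding a row with a missing value simply gets 0 in every Embarked column, so leaving the gaps unfilled is an option.

Alternatively, fill them with the mode: find the most frequent value first, then apply `df.fillna()`.

```python
# One-hot encode the port of embarkation; rows with a missing value get all zeros
train_test = pd.get_dummies(train_test, columns=["Embarked"])
```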
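The mode-based alternative could look roughly like the sketch below. The `embarked` Series is a made-up stand-in for the real column, and the fill would have to run before the one-hot encoding step.

```python
import pandas as pd

# Made-up values standing in for the Embarked column
embarked = pd.Series(["S", "C", "S", None, "Q", "S", None])

# mode() returns a Series because ties are possible, so take the first entry
most_common = embarked.mode()[0]

# Replace the missing entries with the most frequent port
filled = embarked.fillna(most_common)
print(most_common, filled.isnull().sum())
```

To avoid using test-set information, the mode can be computed on the training rows only and then applied to both sets.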

- Name

1. The Name field contains each passenger's title. Extract these keywords, then one-hot encode them.

```python
# Extract the title: the text between the comma and the first period
train_test['Name1'] = (train_test['Name']
                       .str.extract(r'.+,(.+)', expand=False)
                       .str.extract(r'^(.+?)\.', expand=False)
                       .str.strip())

# Merge rare titles into broader groups. The result is assigned back,
# because replace() without assignment leaves the column unchanged.
train_test['Name1'] = train_test['Name1'].replace(['Capt', 'Col', 'Major', 'Dr', 'Rev'], 'Officer')
train_test['Name1'] = train_test['Name1'].replace(['Jonkheer', 'Don', 'Sir', 'the Countess', 'Dona', 'Lady'], 'Royalty')
train_test['Name1'] = train_test['Name1'].replace(['Mme', 'Ms', 'Mrs'], 'Mrs')
train_test['Name1'] = train_test['Name1'].replace(['Mlle', 'Miss'], 'Miss')
# 'Mr' and 'Master' are kept as they are

# One-hot encode the grouped titles
train_test = pd.get_dummies(train_test, columns=['Name1'])
```
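To check what the title extraction produces, here is a small sketch on three names taken from the sample rows above. It uses a single regular expression instead of the two chained `extract` calls. That pattern is an illustrative alternative, not the original code.

```python
import pandas as pd

# Three names from the sample rows shown earlier
names = pd.Series([
    "Braund, Mr. Owen Harris",
    "Cumings, Mrs. John Bradley (Florence Briggs Th...",
    "Heikkinen, Miss. Laina",
])

# Capture the text between the comma and the first period
titles = names.str.extract(r",\s*([^.]+)\.", expand=False).str.strip()
print(titles.tolist())
```

Running `titles.value_counts()` on the full column is a quick way to see which titles are rare enough to be merged into a group.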

```python
# Take the part of the name that follows the title's period
train_test['Name2'] = train_test['Name'].apply(lambda x: x.split('.')[1])

# Count how often each value occurs and attach the count to every row
Name2_sum = train_test['Name2'].value_counts().reset_index()
Name2_sum.columns = ['Name2', 'Name2_sum']
train_test = pd.merge(train_test, Name2_sum, how='left', on='Name2')

# Values that occur only once are grouped into a single category, 'one'
train_test.loc[train_test['Name2_sum'] == 1, 'Name2_new'] = 'one'
train_test.loc[train_test['Name2_sum'] > 1, 'Name2_new'] = train_test['Name2']
del train_test['Name2']

# One-hot encode the grouped values
train_test = pd.get_dummies(train_test, columns=['Name2_new'])

# The raw Name column is no longer needed
del train_test['Name']
```

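- Fare: this feature has a missing value. First locate the row with the missing value, then fill it with a mean.

```python
# Find the row whose Fare is missing
train_test.loc[train_test["Fare"].isnull()]

# Mean fare by ticket class and port of embarkation, computed on the training set
train.groupby(by=["Pclass", "Embarked"]).Fare.mean()
```

```text
Pclass  Embarked
1       C           104.718529
        Q            90.000000
        S            70.364862
2       C            25.358335
        Q            12.350000
        S            20.327439
3       C            11.214083
        Q            11.183393
        S            14.644083
Name: Fare, dtype: float64
```

```python
# Fill the missing fare with the mean value chosen in the original analysis.
# The result is assigned back rather than relying on inplace=True on a column selection.
train_test["Fare"] = train_test["Fare"].fillna(14.435422)
```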
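Instead of reading the group mean from a table and typing it in, the fill can be computed per group. The sketch below assumes the original `Pclass` and `Embarked` columns are still available, which means it would have to run before they are one-hot encoded. The `toy` frame is made-up data.

```python
import numpy as np
import pandas as pd

# Made-up rows: the last passenger has no fare recorded
toy = pd.DataFrame({
    "Pclass":   [1, 1, 3, 3, 3],
    "Embarked": ["C", "C", "S", "S", "S"],
    "Fare":     [80.0, 100.0, 8.0, 12.0, np.nan],
})

# Mean fare of each (Pclass, Embarked) group, aligned row by row with the frame
group_mean = toy.groupby(["Pclass", "Embarked"])["Fare"].transform("mean")

# Fill each missing fare with the mean of its own group
toy["Fare"] = toy["Fare"].fillna(group_mean)
print(toy)
```

`transform("mean")` ignores missing values when averaging, so the missing row receives the mean of the other passengers in its group.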

- Ticket: handled like Name. Extract a prefix first, then one-hot encode it.

```python
# Take the first whitespace-separated token of the ticket.
# Purely numeric tickets have no prefix, so they are set to NaN.
train_test['Ticket_Letter'] = train_test['Ticket'].str.split().str[0]
train_test['Ticket_Letter'] = train_test['Ticket_Letter'].apply(lambda x: np.nan if x.isnumeric() else x)
train_test.drop('Ticket', inplace=True, axis=1)

# One-hot encode the ticket prefix
train_test = pd.get_dummies(train_test, columns=['Ticket_Letter'], drop_first=True)
```

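- Age

1. This column has many missing values, so fill them using a regression model.

2. While refining the model, I considered that a missing age may itself be related to survival, so I built a new feature indicating whether Age is missing.

```python
# This feature was added after a first round of modeling.
# The mean of Survived among rows with a missing Age is about 0.19.
# Note that train_test also contains the test rows, whose Survived is the placeholder 0.
train_test.loc[train_test["Age"].isnull(), 'Survived'].mean()
```

```text
0.19771863117870722
```

```python
# Flag rows whose Age is missing, then one-hot encode the flag
train_test.loc[train_test["Age"].isnull(), "age_nan"] = 1
train_test.loc[train_test["Age"].notnull(), "age_nan"] = 0
train_test = pd.get_dummies(train_test, columns=['age_nan'])
```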

Use the other features to predict Age with a machine-learning model

```python
train_test.info()
```

```text
<class 'pandas.core.frame.DataFrame'>
Int64Index: 1309 entries, 0 to 1308
Columns: 187 entries, Age to age_nan_1.0
dtypes: float64(2), int64(3), object(1), uint8(181)
memory usage: 343.0+ KB
```

```python
# Drop the target and the still-unprocessed Cabin column
missing_age = train_test.drop(['Survived', 'Cabin'], axis=1)

# Rows with a known Age are used for training; rows without one are predicted
missing_age_train = missing_age[missing_age['Age'].notnull()]
missing_age_test = missing_age[missing_age['Age'].isnull()]

# Separate the features from the Age target
missing_age_X_train = missing_age_train.drop(['Age'], axis=1)
missing_age_Y_train = missing_age_train['Age']
missing_age_X_test = missing_age_test.drop(['Age'], axis=1)

# Standardize the features, fitting the scaler on the training rows only
from sklearn.preprocessing import StandardScaler
ss = StandardScaler()

ss.fit(missing_age_X_train)
missing_age_X_train = ss.transform(missing_age_X_train)
missing_age_X_test = ss.transform(missing_age_X_test)

# Fit a Bayesian ridge regression to predict Age
from sklearn import linear_model
lin = linear_model.BayesianRidge()
lin.fit(missing_age_X_train, missing_age_Y_train)
```

```text
BayesianRidge(alpha_1=1e-06, alpha_2=1e-06, compute_score=False, copy_X=True,
       fit_intercept=True, lambda_1=1e-06, lambda_2=1e-06, n_iter=300,
       normalize=False, tol=0.001, verbose=False)
```

```python
# Fill the missing ages with the model's predictions
train_test.loc[(train_test['Age'].isnull()), 'Age'] = lin.predict(missing_age_X_test)

# Bin Age into five groups and one-hot encode the bins
train_test['Age'] = pd.cut(train_test['Age'], bins=[0, 10, 18, 30, 50, 100], labels=[1, 2, 3, 4, 5])
train_test = pd.get_dummies(train_test, columns=['Age'])
```
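One thing to watch with regression-based imputation is that a linear model can predict ages outside the bin range, for example a negative age. `pd.cut` returns NaN for such values, so those rows would end up without any age bin. The sketch below illustrates this with made-up predictions. Clipping to just above 0 is one possible safeguard, not something the original analysis does.

```python
import pandas as pd

# Hypothetical predicted ages, including an out-of-range negative value
predicted_age = pd.Series([-1.5, 0.4, 9.0, 25.0, 64.0])

bins = [0, 10, 18, 30, 50, 100]
labels = [1, 2, 3, 4, 5]

# Without clipping, the negative prediction falls outside every bin
raw = pd.cut(predicted_age, bins=bins, labels=labels)

# Clipping into the open-closed range (0, 100] keeps every row inside a bin
clipped = pd.cut(predicted_age.clip(lower=0.01, upper=100), bins=bins, labels=labels)

print(raw.isnull().sum(), clipped.tolist())
```

Checking `train_test['Age'].isnull().sum()` right after the binning step shows whether any predictions fell outside the bins.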

- Cabin

Too many Cabin values are missing. One option is to categorize passengers by the first letter of the cabin, treating missing values as a category of their own, and use that as a feature. Another is to drop the feature altogether.

```python
# First letter of the cabin as a category; missing values stay NaN
train_test['Cabin_nan'] = train_test['Cabin'].apply(lambda x: str(x)[0] if pd.notnull(x) else x)
train_test = pd.get_dummies(train_test, columns=['Cabin_nan'])

# Binary flag for whether Cabin is missing
train_test.loc[train_test["Cabin"].isnull(), "Cabin_nan"] = 1
train_test.loc[train_test["Cabin"].notnull(), "Cabin_nan"] = 0
train_test = pd.get_dummies(train_test, columns=['Cabin_nan'])
train_test.drop('Cabin', axis=1, inplace=True)
```

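- With feature engineering done, split the dataset

```python
# The first 891 rows are the training set; the rest are the test set
train_data = train_test[:891]
test_data = train_test[891:]

# Separate features from the target
train_data_X = train_data.drop(['Survived'], axis=1)
train_data_Y = train_data['Survived']
test_data_X = test_data.drop(['Survived'], axis=1)
```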

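Data standardization

- Linear models should be trained on standardized data, while tree-based models do not need it.

- When standardizing, fit the scaler on the training set and apply that same transform to the test set.

```python
from sklearn.preprocessing import StandardScaler

# Fit on the training features only, then transform both sets
ss2 = StandardScaler()
ss2.fit(train_data_X)
train_data_X_sd = ss2.transform(train_data_X)
test_data_X_sd = ss2.transform(test_data_X)
```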
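The effect of fitting on the training set only can be seen in a small sketch with made-up numbers: the training columns end up with mean 0 and unit variance, while the test row is scaled with the training statistics and may fall well outside that range.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Made-up feature matrices
X_train = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
X_test = np.array([[2.0, 40.0]])

# Learn the mean and standard deviation from the training data only
scaler = StandardScaler().fit(X_train)
X_train_sd = scaler.transform(X_train)
X_test_sd = scaler.transform(X_test)

print(X_train_sd.mean(axis=0))  # approximately zero per column
print(X_test_sd)                # scaled with the training statistics
```

Fitting a second scaler on the test set would put the two sets on different scales, which is why the same fitted object is reused.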

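3. Building models

Model selection

- Candidate single models include random forest, logistic regression, SVM, xgboost, and GBDT.

- Several models can also be combined through model ensembling, for example voting or stacking.

- Good features set the upper limit of what a model can achieve; a good model and well-tuned parameters can approach that limit arbitrarily closely.

- I tested several models. Random forest scored highest, reaching about 0.8.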
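One common way to compare candidate models before submitting is cross-validation on the training data. The sketch below uses a synthetic dataset from `make_classification` as a stand-in for the engineered features, so the printed scores say nothing about the Titanic data. The model settings are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Synthetic stand-in for the engineered training features and labels
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

models = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=50, random_state=0),
}

# Mean 5-fold accuracy for each candidate
cv_accuracy = {}
for name, model in models.items():
    scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    cv_accuracy[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f} +/- {scores.std():.3f}")
```

With the real data, `X` and `y` would be the training features and `Survived` labels, using the standardized features for the linear model.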

Building the models

Random forest

```python
from sklearn.ensemble import RandomForestClassifier

# Tree-based model, so the unstandardized features are used
rf = RandomForestClassifier(n_estimators=150, min_samples_leaf=3, max_depth=6, oob_score=True)
rf.fit(train_data_X, train_data_Y)

# Predict on the test set and write the submission file
test["Survived"] = rf.predict(test_data_X)
RF = test[['PassengerId', 'Survived']].set_index('PassengerId')
RF.to_csv('RF.csv')

# Save the fitted model to disk.
# sklearn.externals.joblib has been removed; import joblib directly.
import joblib
joblib.dump(rf, 'rf10.pkl')
```
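Because the forest is built with `oob_score=True`, it also produces an out-of-bag accuracy estimate, which gives a rough validation score without a separate hold-out set. A minimal sketch on synthetic stand-in data (`rf_demo` and the dataset are illustrative, not the original model or data):

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for the engineered training features and labels
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

rf_demo = RandomForestClassifier(n_estimators=150, min_samples_leaf=3,
                                 max_depth=6, oob_score=True, random_state=0)
rf_demo.fit(X, y)

# Accuracy estimated on the samples each tree did not see during training
print(rf_demo.oob_score_)

# Relative importance of each feature; the values sum to 1
importances = rf_demo.feature_importances_
print(importances.round(3))
```

On the real data, pairing `feature_importances_` with `train_data_X.columns` would show which engineered features the forest relies on most.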

LogisticRegression

```python
from sklearn.linear_model import LogisticRegression
# GridSearchCV now lives in sklearn.model_selection (sklearn.grid_search was removed)
from sklearn.model_selection import GridSearchCV

# Grid search over C and max_iter on the standardized features
lr = LogisticRegression()
param = {'C': [0.001, 0.01, 0.1, 1, 10], "max_iter": [100, 250]}
clf = GridSearchCV(lr, param, cv=5, n_jobs=-1, verbose=1, scoring="roc_auc")
clf.fit(train_data_X_sd, train_data_Y)

# Per-combination scores (cv_results_ replaces the removed grid_scores_)
clf.cv_results_

# Best parameter combination
clf.best_params_

# Refit with the best parameters; the dict must be unpacked into keyword arguments
lr = LogisticRegression(**clf.best_params_)
lr.fit(train_data_X_sd, train_data_Y)

# Predict on the test set and write the submission file
test["Survived"] = lr.predict(test_data_X_sd)
test[['PassengerId', 'Survived']].set_index('PassengerId').to_csv('LS5.csv')
```
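The grid-search results are easier to read as a table, and the search object already holds a model refit with the best parameters. The sketch below shows both on synthetic stand-in data. The grid is reduced to `C` for brevity, and `search` and `best_lr` are illustrative names.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in for the standardized training features and labels
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

param_grid = {"C": [0.001, 0.01, 0.1, 1, 10]}
search = GridSearchCV(LogisticRegression(max_iter=1000), param_grid,
                      cv=5, scoring="roc_auc")
search.fit(X, y)

# One row per parameter combination, with mean and spread of the CV score
results = pd.DataFrame(search.cv_results_)[["param_C", "mean_test_score", "std_test_score"]]
print(results)
print(search.best_params_)

# With the default refit=True, best_estimator_ is already trained on all the data
best_lr = search.best_estimator_
predictions = best_lr.predict(X[:5])
```

Using `best_estimator_` avoids constructing and fitting the model a second time by hand.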

SVM

```python
from sklearn import svm
from sklearn.model_selection import GridSearchCV

# Grid search reusing the parameter grid defined for logistic regression
svc = svm.SVC()
clf = GridSearchCV(svc, param, cv=5, n_jobs=-1, verbose=1, scoring="roc_auc")
clf.fit(train_data_X_sd, train_data_Y)
clf.best_params_

# Refit with the selected parameters
svc = svm.SVC(C=1, max_iter=250)
svc.fit(train_data_X_sd, train_data_Y)
svc.predict(test_data_X_sd)

# Predict on the test set and write the submission file
test["Survived"] = svc.predict(test_data_X_sd)
SVM = test[['PassengerId', 'Survived']].set_index('PassengerId')
SVM.to_csv('svm1.csv')
```

GBDT

```python
from sklearn.ensemble import GradientBoostingClassifier

# Tree-based model, so the unstandardized features are used
gbdt = GradientBoostingClassifier(learning_rate=0.7, max_depth=6, n_estimators=100, min_samples_leaf=2)
gbdt.fit(train_data_X, train_data_Y)

# Predict on the test set and write the submission file
test["Survived"] = gbdt.predict(test_data_X)
test[['PassengerId', 'Survived']].set_index('PassengerId').to_csv('gbdt3.csv')
```

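xgboost

```python
import xgboost as xgb

# min_samples_leaf is a scikit-learn tree parameter that XGBoost does not use, so it is dropped here;
# min_child_weight is the closest XGBoost setting if a leaf-size constraint is wanted.
xgb_model = xgb.XGBClassifier(n_estimators=150, max_depth=6)
xgb_model.fit(train_data_X, train_data_Y)

# Predict on the test set and write the submission file
test["Survived"] = xgb_model.predict(test_data_X)
XGB = test[['PassengerId', 'Survived']].set_index('PassengerId')
XGB.to_csv('XGB5.csv')
```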

4. Model ensembling

Model ensembling: voting

```python
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
lr = LogisticRegression(C=0.1, max_iter=100)
import xgboost as xgb
# min_samples_leaf and num_round are not XGBClassifier parameters, so they are dropped here
xgb_model = xgb.XGBClassifier(max_depth=6, n_estimators=100)
from sklearn.ensemble import RandomForestClassifier
rf = RandomForestClassifier(n_estimators=200, min_samples_leaf=2, max_depth=6, oob_score=True)
from sklearn.ensemble import GradientBoostingClassifier
gbdt = GradientBoostingClassifier(learning_rate=0.1, min_samples_leaf=2, max_depth=6, n_estimators=100)

# Hard voting: each model casts one vote and the majority class wins
vot = VotingClassifier(estimators=[('lr', lr), ('rf', rf), ('gbdt', gbdt), ('xgb', xgb_model)], voting='hard')
vot.fit(train_data_X_sd, train_data_Y)

# Predict on the test set and write the submission file
test["Survived"] = vot.predict(test_data_X_sd)
test[['PassengerId', 'Survived']].set_index('PassengerId').to_csv('vot5.csv')
```
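With four voters, hard voting can tie at two votes each, and scikit-learn then picks the class that comes first in sorted label order. Soft voting, which averages the predicted probabilities, is one alternative worth trying. The sketch below uses synthetic stand-in data and only two base models to stay short. It is an illustration, not the configuration used above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for the standardized training features and labels
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Soft voting averages the class probabilities of the base models
soft_vote = VotingClassifier(
    estimators=[
        ("lr", LogisticRegression(max_iter=1000)),
        ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
    ],
    voting="soft",
)
soft_vote.fit(X, y)

# Averaged probabilities for the first five rows; each row sums to 1
proba = soft_vote.predict_proba(X[:5])
print(proba)
```

Soft voting requires every base model to implement `predict_proba`, which all four models in the voting ensemble above do.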

Model ensembling: stacking

```python
# Inputs for stacking
X = train_data_X_sd
X_predict = test_data_X_sd
y = train_data_Y.values  # plain array, so the positional fold indices work

# Base models used in the ensemble
from sklearn.linear_model import LogisticRegression
from sklearn import svm
import xgboost as xgb
from sklearn.ensemble import RandomForestClassifier
from sklearn.ensemble import GradientBoostingClassifier
clfs = [LogisticRegression(C=0.1, max_iter=100),
        xgb.XGBClassifier(max_depth=6, n_estimators=100),  # num_round is not an XGBClassifier parameter
        RandomForestClassifier(n_estimators=100, max_depth=6, oob_score=True),
        GradientBoostingClassifier(learning_rate=0.3, max_depth=6, n_estimators=100)]

# Build the cross-validation folds (StratifiedKFold now lives in sklearn.model_selection)
from sklearn.model_selection import StratifiedKFold
n_folds = 5
skf = list(StratifiedKFold(n_splits=n_folds).split(X, y))

# Second-level features: one column per base model
dataset_blend_train = np.zeros((X.shape[0], len(clfs)))
dataset_blend_test = np.zeros((X_predict.shape[0], len(clfs)))

for j, clf in enumerate(clfs):
    # Train each base model in turn
    dataset_blend_test_j = np.zeros((X_predict.shape[0], len(skf)))
    for i, (train_idx, valid_idx) in enumerate(skf):
        # Hold out fold i and train on the rest; the predictions on the
        # held-out fold become the new feature for those rows.
        X_train, y_train, X_valid = X[train_idx], y[train_idx], X[valid_idx]
        clf.fit(X_train, y_train)
        dataset_blend_train[valid_idx, j] = clf.predict_proba(X_valid)[:, 1]
        dataset_blend_test_j[:, i] = clf.predict_proba(X_predict)[:, 1]
    # For the test set, use the mean prediction of the k fold models as the new feature
    dataset_blend_test[:, j] = dataset_blend_test_j.mean(1)

# Second-level model trained on the base models' predictions
clf2 = LogisticRegression(C=0.1, max_iter=100)
clf2.fit(dataset_blend_train, y)
y_submission = clf2.predict_proba(dataset_blend_test)[:, 1]

# Predict on the test set and write the submission file
test = pd.read_csv("test.csv")
test["Survived"] = clf2.predict(dataset_blend_test)
test[['PassengerId', 'Survived']].set_index('PassengerId').to_csv('stack3.csv')
```
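Recent versions of scikit-learn (0.22 and later) ship a `StackingClassifier` that handles the out-of-fold bookkeeping internally. The sketch below is an alternative under that assumption, shown on synthetic stand-in data with two base models. One difference from the manual loop is that it refits each base model on the full training set for test-time predictions instead of averaging the fold models.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (GradientBoostingClassifier,
                              RandomForestClassifier, StackingClassifier)
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for the standardized training features and labels
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

stack = StackingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(n_estimators=50, max_depth=6, random_state=0)),
        ("gbdt", GradientBoostingClassifier(n_estimators=50, random_state=0)),
    ],
    # Second-level model, matching the settings of the manual version
    final_estimator=LogisticRegression(C=0.1, max_iter=100),
    cv=5,                          # folds used for the out-of-fold predictions
    stack_method="predict_proba",  # feed class probabilities to the second level
)
stack.fit(X, y)

pred = stack.predict(X[:5])
print(pred)
```

The manual loop remains useful when the intermediate blend features need to be inspected or saved.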